Recently I had the privilege of working on a project where I had to implement AWS Data Pipeline. Reading through the documentation, I found the basic concepts easy enough to understand. As stated on the AWS Data Pipeline homepage:

AWS Data Pipeline is a web service that helps you reliably process and move data between different AWS compute and storage services, as well as on-premise data sources, at specified intervals.

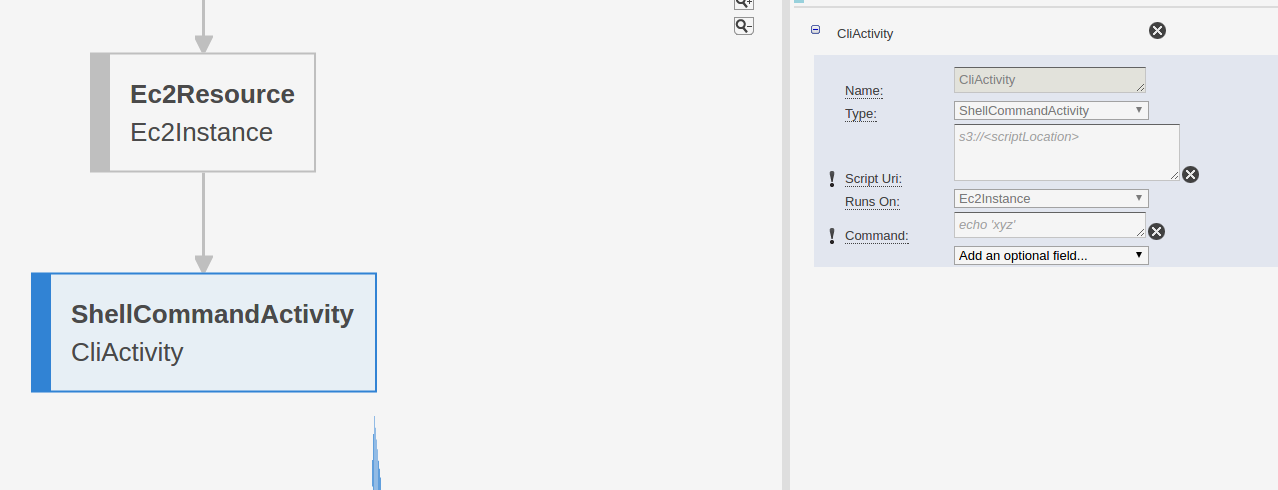

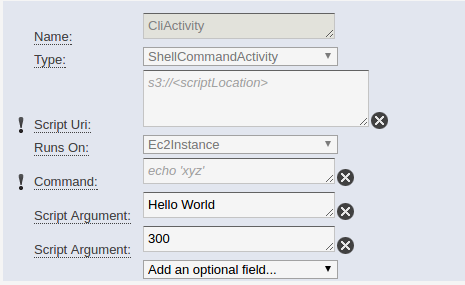

Basically, a data pipeline lets you run an EC2 instance, either on a schedule or through on-demand activation, to manipulate data and transfer the output to other AWS services. For the project I worked on, the pipeline simply had to run a shell script that gets data from AWS S3, processes it, and uploads the processed data back to AWS S3. Going through the documentation, you will see two ways to supply the script that runs on the launched instance when the pipeline is activated. The first is the Command field and the second is the Script Uri field. The two cannot be set together in one configuration, so you have to choose one.
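To make the get, process, upload flow concrete, here is a minimal sketch of what such a script could look like. It is not the script from my project: the `transform` step is a placeholder that just upper-cases the input, the function names are made up for illustration, and it assumes the AWS CLI is installed on the instance with permission to read and write the S3 locations.

```shell
#!/bin/bash
# Hypothetical worker script: download from S3, process, upload back to S3.

# Placeholder processing step (an assumption for this sketch):
# upper-case every line read from stdin.
transform() {
  tr '[:lower:]' '[:upper:]'
}

# run_job SRC_S3_URI DST_S3_URI
run_job() {
  local src="$1" dst="$2"
  local workdir
  workdir="$(mktemp -d)"

  # Each step runs only if the previous one succeeded.
  aws s3 cp "$src" "$workdir/input.txt" &&
    transform < "$workdir/input.txt" > "$workdir/output.txt" &&
    aws s3 cp "$workdir/output.txt" "$dst"
  local status=$?

  # Clean up the temporary directory and report the result of the chain.
  rm -rf "$workdir"
  return "$status"
}
```

Calling `run_job` with a source and a destination S3 URI (whatever your own bucket and keys are) would run the three steps in order and return a non-zero status if any of them fails.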

Passing parameters

According to the documentation, it is not possible to pass parameters to a shell script provided in the Script Uri field, and you should use the Command field instead. I was disappointed by this because my shell script needs two arguments in order to run to completion. Luckily, there IS a way to pass parameters to the script file, and it is very easy.

Just add another optional field, Script Argument, once for each value you want to pass. According to the documentation, this field is only used with the Command field, but I have found that it also works with Script Uri. In your shell script file, you can access the values using $n, where n is the position of the script argument in the order you added them. In the example above, $1 will have a value of "Hello World" while $2 will have a value of 300.
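On the script side, reading the values could look like the sketch below. The function and variable names (`process`, `message`, `interval`) are hypothetical; only the use of `$1` and `$2` comes from the setup described above.

```shell
#!/bin/bash
# Hypothetical script stored at the Script Uri location.

process() {
  local message="$1"   # first Script Argument, e.g. "Hello World"
  local interval="$2"  # second Script Argument, e.g. 300
  echo "message=${message} interval=${interval}"
}

# The Script Argument values arrive as positional parameters.
# Quoting "$@" keeps "Hello World" as a single argument.
if [ "$#" -lt 2 ]; then
  echo "usage: $0 MESSAGE INTERVAL" >&2
else
  process "$@"
fi
```

Always quote the parameters ("$1", "$@") inside the script. Without the quotes, a value that contains a space, such as "Hello World", would be split into two words. If you write the pipeline as a definition file rather than in the console, the matching keys should be `scriptUri` and `scriptArgument`, but check that against the current documentation.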

That's it! Hopefully this will help someone in their quest to master Data Pipeline. I also hope AWS updates its documentation so that users don't have a hard time with cases like this.
